Original Paper Reference: Improving Robustness Without Sacrificing Accuracy with Patch Gaussian Augmentation

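This paper offers another option for data augmentation: it combines Gaussian noise with patch-based augmentation to improve model robustness without sacrificing accuracy.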

Introduction

The following paragraphs from the paper, which summarize research on deep learning robustness, are worth reading:

Research in neural network robustness has tried to quantify the problem by establishing benchmarks that directly measure it and comparing the performance of humans and neural networks.

Others have tried to understand robustness by highlighting systemic failure modes of current learning methods. For instance, networks exhibit excessive invariance to visual features, texture bias, sensitivity to worst-case (adversarial) perturbations, and a propensity to rely solely on non-robust, but highly predictive features for classification. Of particular relevance to our work, Ford et al show that in order to get adversarial robustness, one needs robustness to noise-corrupted data.

Another line of work has attempted to increase model robustness performance, either by directly projecting out superficial statistics, via architectural improvements, pre-training schemes, or through the use of data augmentations. Data augmentation increases the size and diversity of the training set, and provides a simple method for learning invariances that are challenging to encode architecturally. Recent work in this area includes learning better transformations, inferring combinations of transformations, unsupervised methods, theory of data augmentation, and applications for one-shot learning.

Preliminaries

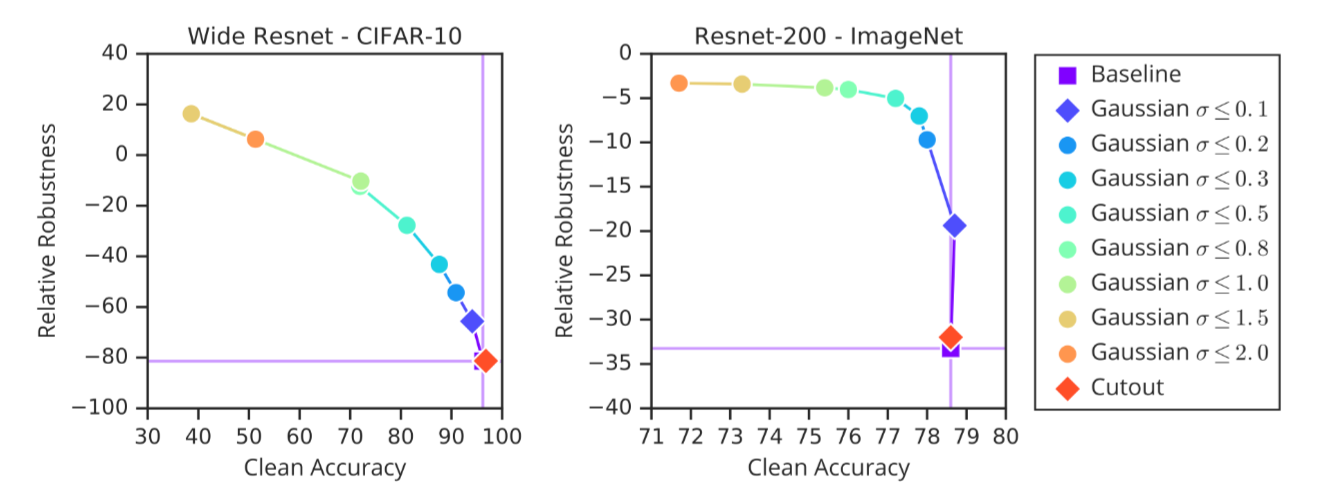

This section mainly describes the two methods the paper builds on, Cutout and Gaussian.

The former sets a random patch of the input images to a constant (the mean pixel in the dataset) and is successful at improving clean accuracy. The latter works by adding independent Gaussian noise to each pixel of the input image, which can increase robustness to Gaussian noise directly.
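To make the two baseline augmentations concrete, here is a minimal NumPy sketch based only on the description quoted above. The function names, the `rng` argument, the border clipping, and the assumption that images are float arrays in [0, 1] are my own choices, not the paper's code.

```python
import numpy as np

def cutout(image, patch_size, fill_value, rng):
    """Set a square patch at a random location to a constant value.

    Assumption: the patch centre can fall anywhere in the image, so the
    patch is clipped where it crosses the border.
    """
    h, w = image.shape[:2]
    cy, cx = rng.integers(0, h), rng.integers(0, w)
    half = patch_size // 2
    y0, y1 = max(cy - half, 0), min(cy - half + patch_size, h)
    x0, x1 = max(cx - half, 0), min(cx - half + patch_size, w)
    out = image.copy()
    out[y0:y1, x0:x1] = fill_value  # e.g. the dataset mean pixel
    return out

def gaussian_noise(image, sigma, rng):
    """Add independent Gaussian noise to every pixel, then clip to [0, 1]."""
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)
```

Cutout removes information in one region and leaves the rest untouched, while Gaussian perturbs every pixel. The trade-off discussed in this chapter comes from that difference.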

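It then shows how Cutout and Gaussian augmentations trade off robustness against accuracy. To be honest, I did not fully understand this figure, nor do I see why this material needed a chapter of its own.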

Method

The first part describes how Patch Gaussian is implemented. In my view, it is essentially a stitching-together of the two methods above.
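The "stitching" can be sketched as follows: pick a patch the way Cutout does, but fill it with Gaussian-noised pixels instead of a constant. This is a minimal sketch under my own assumptions (float images in [0, 1], a fixed `sigma`, a patch clipped at the border), not the authors' implementation. How the paper samples the noise scale is not covered in these notes.

```python
import numpy as np

def patch_gaussian(image, patch_size, sigma, rng):
    """Add Gaussian noise only inside one randomly placed square patch."""
    h, w = image.shape[:2]
    # Patch centre sampled anywhere in the image (assumption: the patch
    # is clipped where it crosses the border).
    cy, cx = rng.integers(0, h), rng.integers(0, w)
    half = patch_size // 2
    y0, y1 = max(cy - half, 0), min(cy - half + patch_size, h)
    x0, x1 = max(cx - half, 0), min(cx - half + patch_size, w)

    # Noised copy of the whole image, clipped back to the valid range.
    noisy = np.clip(image + rng.normal(0.0, sigma, size=image.shape), 0.0, 1.0)

    # Boolean mask that is True inside the patch; the trailing singleton
    # axes let it broadcast over colour channels if there are any.
    mask = np.zeros((h, w) + (1,) * (image.ndim - 2), dtype=bool)
    mask[y0:y1, x0:x1] = True
    return np.where(mask, noisy, image)
```

With a patch as large as the image this behaves much like plain Gaussian augmentation, and with a small patch most of the image stays clean, which is presumably why the patch size and the noise scale are the hyperparameters to tune.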

The second part covers the choice of the relevant hyperparameters and gives the definition of robustness that the paper studies:

Here, robustness is defined as average accuracy of the model, when tested on data corrupted by various σ (0.1, 0.2, 0.3, 0.5, 0.8, 1.0) of Gaussian noise, relative to the clean accuracy. This metric is correlated with mCE, so it ensures model robustness is generally useful beyond Gaussian corruptions. By picking models based on their Gaussian noise robustness, we ensure that our selection process does not overfit to the Common Corruptions benchmark.

It is computed as follows:
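A minimal sketch of this metric as I read the quoted definition. `accuracy_fn` is a hypothetical callback that returns test accuracy at a given noise level, and treating "relative to the clean accuracy" as a ratio is my assumption, since the wording could also mean a difference.

```python
import numpy as np

# Noise levels listed in the quoted definition.
SIGMAS = (0.1, 0.2, 0.3, 0.5, 0.8, 1.0)

def gaussian_robustness(accuracy_fn, clean_accuracy, sigmas=SIGMAS):
    """Mean accuracy under Gaussian noise, relative to clean accuracy.

    accuracy_fn(sigma) is a hypothetical callback returning the model's
    test accuracy on data corrupted with Gaussian noise of that sigma.
    Assumption: "relative to" is read as a ratio.
    """
    noisy_accuracies = [accuracy_fn(sigma) for sigma in sigmas]
    return float(np.mean(noisy_accuracies)) / clean_accuracy
```

Under this reading, a model whose accuracy does not drop at all under noise scores 1.0, and lower values mean a larger relative drop.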

The third part covers the datasets and implementation details.

Results

The way this section is organized is worth learning from: each heading directly states the experimental finding. The first part is:

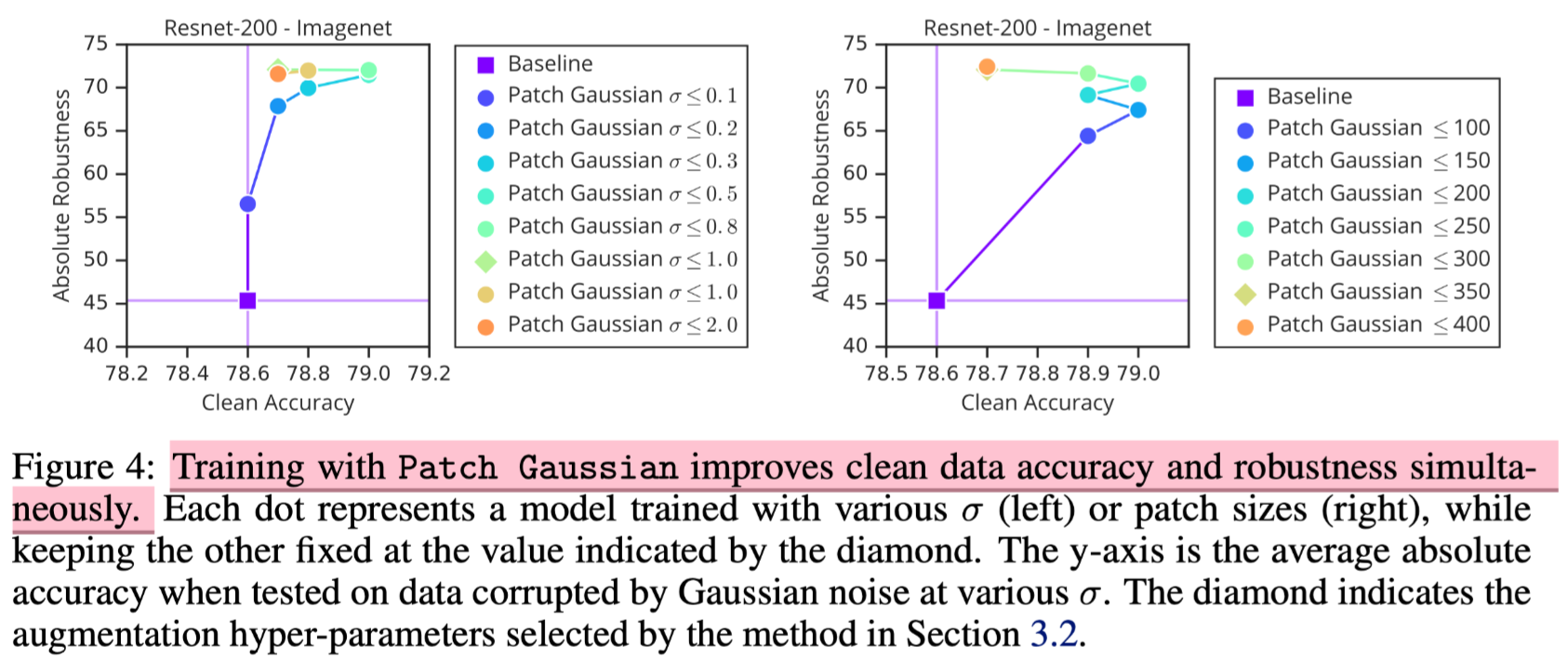

Patch Gaussian overcomes this trade-off and improves both accuracy and robustness

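That is, the experiments show that the trade-off between accuracy and robustness can be overcome: robustness improves with no loss of accuracy.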

To be honest, I find the figures in this paper hard to read, including the one above.

The second part:

Training with Patch Gaussian leads to improved Common Corruption robustness

This shows that Patch Gaussian improves robustness on the Common Corruptions benchmark datasets.

The paper's description of this standard benchmark is recorded here:

The Common Corruptions benchmark: This benchmark, also referred to as CIFAR-C and ImageNet-C, is composed of images transformed with 15 corruptions, at 5 severities each. The corruptions are designed to model those commonly found in real-world settings, such as brightness, different weather conditions, and different kinds of noise.

The third part:

Patch Gaussian can be combined with other regularization strategies

The paper first compares against several other regularization methods (larger weight decay, label smoothing, and dropblock) and concludes:

We find that while label smoothing improves clean accuracy, it weakens the robustness in all corruption metrics we have considered. This agrees with the theoretical prediction from, which argued that increasing the confidence of models would improve robustness, whereas label smoothing reduces the confidence of predictions. We find that increasing the weight decay from the default value used in all models does not improve clean accuracy or robustness.
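To see why label smoothing lowers prediction confidence, here is a minimal sketch of the standard formulation, in which each one-hot target is mixed with a uniform distribution over classes. The function name and the default `epsilon=0.1` are illustrative choices of mine, not values taken from the paper.

```python
import numpy as np

def smooth_labels(labels, num_classes, epsilon=0.1):
    """Mix one-hot targets with a uniform distribution over classes."""
    one_hot = np.eye(num_classes)[labels]
    # The true class keeps 1 - epsilon + epsilon / K; every other class
    # receives epsilon / K, so the target is never fully confident.
    return one_hot * (1.0 - epsilon) + epsilon / num_classes
```

With 10 classes and `epsilon=0.1`, the true class is trained toward 0.91 rather than 1.0, so the model is pushed away from maximally confident outputs.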

The paper also combines Patch Gaussian with the other regularization methods and concludes that doing so further improves model performance.

The fourth part:

Patch Gaussian can be combined with AutoAugment policies for improved results

The fifth part:

Patch Gaussian improves performance in object detection

Discussion

The discussion mainly uses frequency-domain analysis to explain why the proposed augmentation performs well.

The procedure is as follows:

First, we perturb each image in the dataset with noise sampled at each orientation and frequency in Fourier space. Then, we measure changes in the network activations and test error when evaluated with these Fourier-noise-corrupted images: we measure the change in ℓ2 norm of the tensor directly after the first convolution, as well as the absolute test error. This procedure yields a heatmap, which indicates model sensitivity to different frequency and orientation perturbations in the Fourier domain.
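The procedure can be sketched roughly as follows. This is a simplified illustration under my own assumptions: square images of shape `(n, size, size, channels)` in [0, 1], a deterministic basis image per frequency rather than sampled noise, and a hypothetical `error_fn` callback that returns the model's test error on a batch. It only covers the test-error heatmap, not the activation-norm measurement.

```python
import numpy as np

def fourier_basis_noise(size, i, j, norm=1.0):
    """Real-valued image whose spectrum sits at frequency (i, j).

    Taking the real part also puts energy at the conjugate-symmetric
    frequency, which is unavoidable for a real-valued image.
    """
    spectrum = np.zeros((size, size), dtype=complex)
    spectrum[i, j] = 1.0
    basis = np.real(np.fft.ifft2(spectrum))
    # Rescale so every perturbation has the same L2 norm.
    return basis * (norm / np.linalg.norm(basis))

def sensitivity_heatmap(error_fn, images, norm=4.0):
    """Model error after perturbing images at each Fourier frequency.

    error_fn(batch) is a hypothetical callback returning the test error
    of the model on the given batch of images.
    """
    size = images.shape[1]
    heatmap = np.zeros((size, size))
    for i in range(size):
        for j in range(size):
            noise = fourier_basis_noise(size, i, j, norm)
            # Same perturbation added to every image and channel.
            perturbed = np.clip(images + noise[None, :, :, None], 0.0, 1.0)
            heatmap[i, j] = error_fn(perturbed)
    return heatmap
```

Each cell of the heatmap corresponds to one frequency and orientation, so bright regions mark the perturbations the model is most sensitive to.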

Reading Summary

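At first glance the paper looks quite good, but on closer inspection its novelty is debatable: it stitches together the two methods from the paper's second chapter to obtain a new method and demonstrates that the method is effective. The experimental and analytical work is nonetheless worth learning from.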